Parameter Store at Edge

Continuing the Serverless GraphQL at Edge journey, one of the optimizations is to keep configuration variables in the Systems Manager (SSM) Parameter Store. A parameter can only be created in a specific region, but working with Lambda@Edge means the parameter may not be in the same region where the Lambda is active. Retrieving a parameter from another region adds latency.

That is why a replication mechanism is needed, so that the parameter is available within the region where the Lambda@Edge function runs.

The Different Attempts

Several attempts were made before arriving at a good solution for SSM Parameter Store replication. The sections below explain the advantages and disadvantages of each attempt.

EventBridge

The first attempt was to use EventBridge. Whenever a parameter is created, updated, or deleted in the region where the rule is set up, the change is replicated to other regions. This is one of the advantages of EventBridge: it works with events, and the SSM Parameter Store can be set as the event source. It is also possible to define an event pattern in the event rule, so that the rule only applies when the pattern is matched. The problem is, how will it know which region to replicate the parameter to? It would end up setting up rules for all regions, which defeats the purpose of on-the-fly replication.
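As an illustration of the event source and event pattern mentioned above, here is a minimal sketch of a pattern for Parameter Store changes, written as a plain TypeScript object. The `source`, `detail-type`, and `operation` values follow the event format AWS documents for Parameter Store; the `/graphql/` name prefix is a hypothetical example and not taken from the project.

```typescript
// Event pattern matching Parameter Store changes.
// The "/graphql/" prefix is a hypothetical naming convention for this sketch.
const parameterChangePattern = {
  source: ["aws.ssm"],
  "detail-type": ["Parameter Store Change"],
  detail: {
    // Only react to the three operations discussed in the text.
    operation: ["Create", "Update", "Delete"],
    // Prefix matching limits the rule to one group of parameters.
    name: [{ prefix: "/graphql/" }],
  },
};

// The pattern is plain JSON, so it can be serialized for a rule definition.
const parameterChangePatternJson = JSON.stringify(parameterChangePattern);
```

Note that the pattern only decides which changes trigger the rule. It says nothing about which regions should receive the copy, which is exactly the gap described above.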

Viewer Request

To know which region to replicate to, one can add another Lambda@Edge function as the Viewer Request handler. Its responsibility is to create the necessary SSM parameter within its region when it does not exist yet. The advantage of this approach is that the logic is separated from the Origin Request handler, which can then focus on the core functionality. Unfortunately, it adds unnecessary overhead that results in additional latency, especially on a cold start. Below is the result of the observation:

| | Viewer Request | Origin Request | Latency (user receives the response) |
| --- | --- | --- | --- |
| Cold Start (Parameter Store not yet in the edge location) | ~1.7s | ~1.7s | ~5s |
| Warmed Up | ~500ms | ~500ms | ~1.1s |

Here’s a sample of Lambda@Edge that works with serverless-offline-edge-lambda: ssm-creation-edge-viewer

Based on this result, especially during a cold start, it seems that CloudFront introduces some overhead when there are several handlers for different events (viewer request and origin request). Also, these handlers are executed synchronously.

Because of this, it is best to keep the logic within the Origin Request handler. Below is the observation result:

| | Origin Request | Latency (user receives the response) | Init |
| --- | --- | --- | --- |
| Cold Start (Parameter Store not yet in the edge location) | ~2.6s | ~3.7s | ~750ms |
| Cold Start (Parameter Store is in the edge location) | ~2s | ~3s | ~700ms |
| Warmed Up | ~500ms | ~550ms | NA |

Comparing the two observations, there is a big difference in latency. Some overhead is eliminated when only the Origin Request handler manages both the retrieval of the parameter from the source region and its creation within the edge location. Once warmed up, the latency comes mostly from the Origin Request processing time. During a cold start there is not much gain from having the parameter within the region versus accessing it from the source region, but an improvement of a few hundred milliseconds is already good and might be useful later.
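The retrieval and creation logic kept in the Origin Request handler could look roughly like the sketch below. It is not the project's actual code: `ParameterClient` and `InMemoryParameters` are hypothetical names introduced for illustration, and a real handler would back the interface with an SSM client for each region.

```typescript
// Hypothetical minimal view of SSM for one region.
interface ParameterClient {
  // Resolves to undefined when the parameter does not exist.
  get(name: string): Promise<string | undefined>;
  put(name: string, value: string): Promise<void>;
}

// Returns the value from the edge region, copying it over from the
// source region first when the edge region does not have it yet.
async function getOrReplicate(
  name: string,
  edge: ParameterClient,
  source: ParameterClient,
): Promise<string> {
  const local = await edge.get(name);
  if (local !== undefined) return local;

  const value = await source.get(name);
  if (value === undefined) {
    throw new Error(`Parameter ${name} not found in the source region`);
  }
  await edge.put(name, value);
  return value;
}

// In-memory stand-in for a region, handy for trying the flow locally.
class InMemoryParameters implements ParameterClient {
  private store: Record<string, string>;
  constructor(initial: Record<string, string> = {}) {
    this.store = { ...initial };
  }
  async get(name: string): Promise<string | undefined> {
    return this.store[name];
  }
  async put(name: string, value: string): Promise<void> {
    this.store[name] = value;
  }
}
```

Only the first request in a region pays for the cross-region read. Later requests find the parameter locally and skip the source region entirely.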

Parameter Store Cleanup

One thing to think about is not polluting the edge regions with unused parameters, as these are not managed in the infrastructure code (CDK). The SSM Parameter Store has an expiration setting, but this feature is only available in the Advanced Tier, which is not free. Sticking to the Standard Tier, the only way is to introduce a scheduled task or job for parameter cleanup.

There are 2 options for creating a scheduled job: a CloudWatch Rule and an EventBridge Rule.

To give further details, EventBridge is a serverless event bus built on top of the CloudWatch Events API, with additional features such as the ability to create custom event buses. So, in terms of creating a scheduled job, there is no difference between a CloudWatch Rule and an EventBridge Rule.
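For comparison, the Advanced Tier expiration setting is expressed as a parameter policy attached when the parameter is written. The sketch below only builds the policy JSON in the format AWS documents for expiration policies; the helper name `expirationPolicy` is made up for this example, and the solution described here does not use it.

```typescript
// Builds the JSON string for an Advanced Tier expiration policy.
// The policy is passed as a JSON array when the parameter is written.
function expirationPolicy(expiresAt: Date): string {
  return JSON.stringify([
    {
      Type: "Expiration",
      Version: "1.0",
      // ISO 8601 timestamp at which the parameter should be deleted.
      Attributes: { Timestamp: expiresAt.toISOString() },
    },
  ]);
}
```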

Here’s a sample of EventBridge Rule declaration in infrastructure code (CDK): EventBridge Scheduler declaration

The sample solution uses the EventBridge rule, scheduled to execute, for example, every 12 hours. Cleaning up at a regular interval makes sure that parameters are deleted when they are no longer used or needed, and also lets the edge region pick up an up-to-date value of the parameter.

The Final Solution

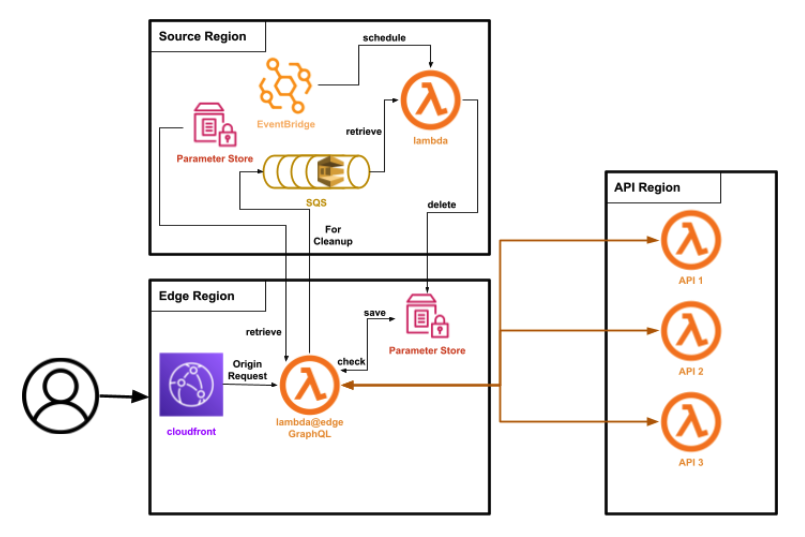

Based on the experiments done, the following components are needed to replicate SSM parameters in the edge region:

- Logic in the Lambda@Edge function that will:
  - check if the parameter already exists in the edge region
  - if it does not exist, retrieve the parameter from the source region
  - save the parameter in the edge region's SSM Parameter Store
  - send an entry to SQS for cleanup later
- A scheduled job using EventBridge that will trigger a Lambda function. The Lambda function will retrieve all the messages from the SQS queue and delete the corresponding SSM parameters in the edge regions.
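The cleanup side of the list above could be sketched as follows. This is an assumption-laden outline rather than the repository's code: `CleanupEntry`, `CleanupQueue`, and `DeleteParameters` are hypothetical names, the queue is assumed to hand out each message once and return an empty batch when drained, and a real implementation would wire these to SQS and SSM clients. The batch size reflects the SSM `DeleteParameters` limit of 10 names per call.

```typescript
// Hypothetical shape of the entry sent to SQS by the Lambda@Edge function.
interface CleanupEntry {
  region: string; // edge region where the parameter was created
  name: string; // name of the replicated parameter
}

// Hypothetical ports over SQS and SSM.
interface CleanupQueue {
  // Resolves to an empty array once the queue is drained.
  receiveBatch(): Promise<CleanupEntry[]>;
}
type DeleteParameters = (region: string, names: string[]) => Promise<void>;

// SSM DeleteParameters accepts at most 10 names per call.
const MAX_NAMES_PER_CALL = 10;

// Drains the queue, then deletes each parameter once per region.
// Returns the number of parameters passed to deleteParameters.
async function cleanUpEdgeParameters(
  queue: CleanupQueue,
  deleteParameters: DeleteParameters,
): Promise<number> {
  const namesByRegion: Record<string, string[]> = {};
  let batch = await queue.receiveBatch();
  while (batch.length > 0) {
    for (const entry of batch) {
      const names = namesByRegion[entry.region] || (namesByRegion[entry.region] = []);
      // Skip duplicates: several requests may have queued the same parameter.
      if (names.indexOf(entry.name) === -1) names.push(entry.name);
    }
    batch = await queue.receiveBatch();
  }

  let deleted = 0;
  for (const region of Object.keys(namesByRegion)) {
    const names = namesByRegion[region];
    for (let i = 0; i < names.length; i += MAX_NAMES_PER_CALL) {
      const chunk = names.slice(i, i + MAX_NAMES_PER_CALL);
      await deleteParameters(region, chunk);
      deleted += chunk.length;
    }
  }
  return deleted;
}
```

Carrying the region in each entry lets a single scheduled function clean up parameters in every edge region that received a copy.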

The diagram at the start of this section shows what the overall infrastructure looks like.

Here is the version of the codebase: graphql-as-bff-v1.1.0